
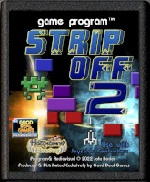

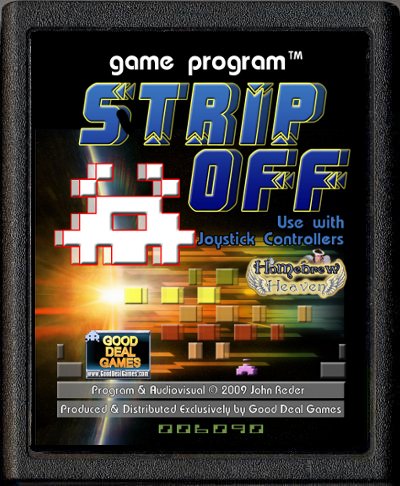

Strip-Off 2 for the Atari 2600 Console

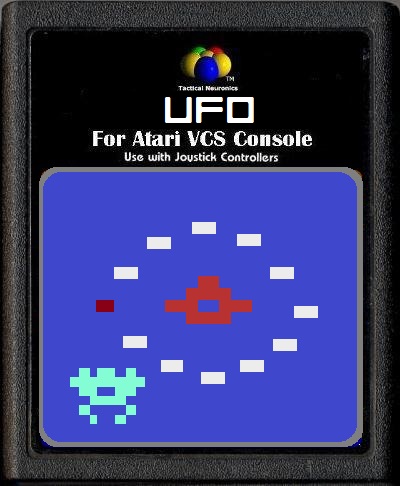

ATARI 2600 GAMES BY TACTICAL NEURONICS

CLICK ATARI 2600 CART TO PLAY ONLINE!

Atari 2600 ROM (.bin file) for PC emulation or writable cartridge play.

FREE DOWNLOAD

Click here to play it Online!

Game Cartridge Available Soon from Good Deal Games


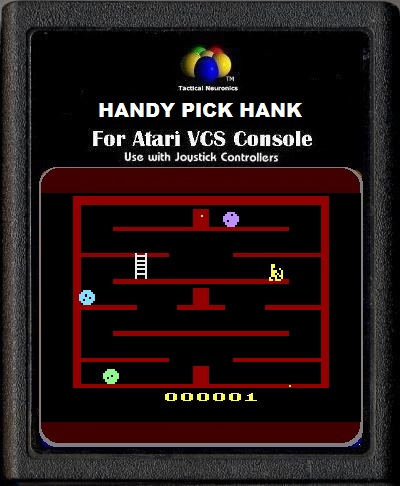

SCREEN SHOTS

Talk about Strip Off 2 for the Atari 2600 here

FEATURE LIST

16 game modes to choose from! This is a one-player game in which you try to keep the alien from stealing all of your planetary barrier components.

You'll need to run it with an Atari 2600 emulator or install it on a writable cartridge for use on an Atari 2600 console.

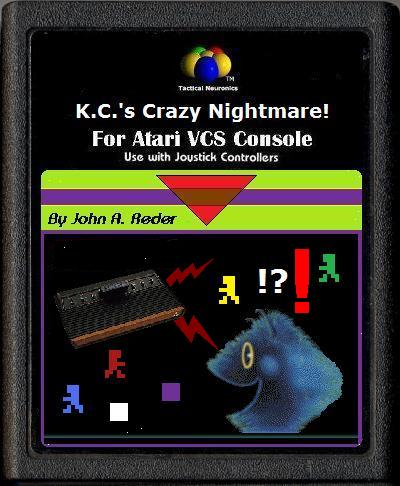

Strip Off 2 (2017) by John A. Reder. The idea was derived from the old video game Rip Off. I mixed it with some blockout and Space Invaders, and this game was born.

Objective:

The game ends when all of your barrier has been stripped away by the alien, or when you are hit by the alien's reflected shot in Advanced mode. You score 1 point for each alien destroyed.

When you are down to 10 barrier chunks on Beginner or 25 barrier chunks on Advanced, the alien doubles in speed! Your barrier is replenished every 20 points at first, but that interval increases by 2 for every two levels cleared.
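
The speed-up and replenishment rules above can be sketched in a few lines of Python. This is only an illustration of the rules as described here, not the game's actual code. The function and constant names are made up for the example, and the replenishment formula is just one reading of "increases by 2 for each two levels cleared".

```python
# Thresholds come from the rules text; all names here are hypothetical.
SPEEDUP_THRESHOLD = {"beginner": 10, "advanced": 25}


def alien_speed_multiplier(mode, barrier_chunks):
    """Return 2 once the barrier is down to the mode's threshold, else 1."""
    return 2 if barrier_chunks <= SPEEDUP_THRESHOLD[mode] else 1


def replenish_interval(levels_cleared):
    """Points needed between barrier replenishments.

    Assumption: starts at 20 and grows by 2 for every two levels cleared.
    """
    return 20 + 2 * (levels_cleared // 2)
```

For example, with 12 chunks left the alien is already at double speed on Advanced but still at normal speed on Beginner.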


Get the ROM file here (version 20170205)
